Nitheesh NH

The Coresight Research team attended the first day of the Bengaluru Tech Summit on November 29. This was the 21st edition of the flagship event of the Department of Information Technology, Government of Karnataka (Bengaluru is Karnataka State’s capital city). The Chief Minister of Karnataka, H.D. Kumaraswamy, kicked off the event with a grand inaugural ceremony that included other ministers of the state; delegates from Australia, France and Estonia; and industry leaders.

Our team attended keynote presentations and panel discussions led by industry experts. Here, we share our top takeaways from the first day of the summit.

There Is a Significant Shortage of Talent in the AI and Cybersecurity Sectors

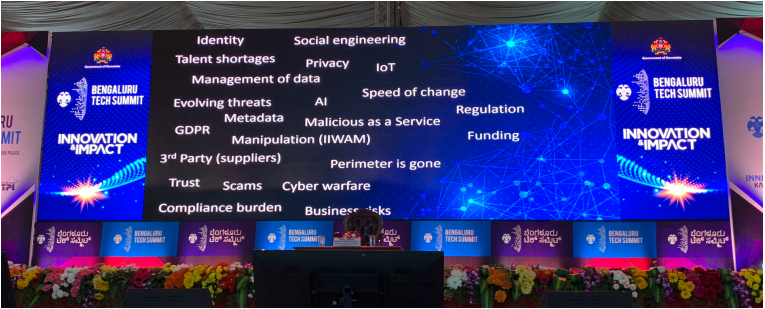

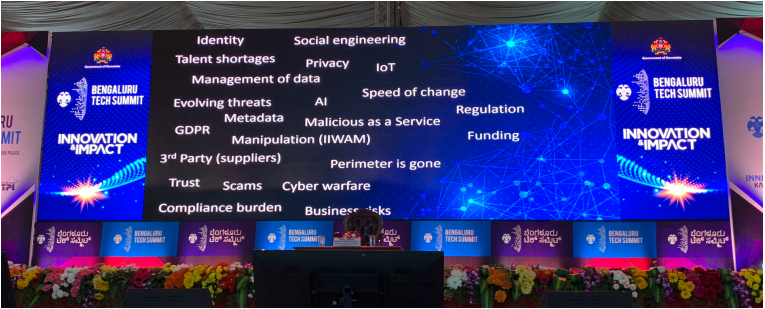

In a keynote address, Damien Manuel, Director of the Centre for Cyber Security Solutions at Deakin University in Australia, summarized the predominant threats in cybersecurity across industries and the hurdles to mitigating them. Manuel spoke about cyber-enabled information and influence warfare, and noted that manipulation is the latest form of threat, as a growing number of people consume news through social media. Some 69% of Americans consume news through social media channels rather than through traditional sources, he said.

Manuel stated that regulatory hurdles and inadequate funds are some of the issues impeding firms from deploying cybersecurity measures. He added that key challenges to implementing cybersecurity architecture include a shortage of experts and the inability to upskill or reskill people fast enough to keep up with the pace of change in the industry.

[caption id="attachment_93740" align="aligncenter" width="700"] Presentation slide showing the challenges to implementing cybersecurity measures Source: Coresight Research[/caption]

Gaurav Sharma, VP of IBM India Software Labs and India/South Asia Growth, expressed similar thoughts on the shortage of talent in his keynote, “Emerging Opportunities and Challenges in AI.” Sharma remarked that when technology providers are asked to implement AI solutions for large enterprises, reskilling the workforce is not always the best first move. Providers first need to work on understanding the culture of an organization in order to resolve any concerns staff may have about AI, and then work to deploy AI-powered technological infrastructure.

Abhinav Johri, Director for Global Digital Transformation Learning at Ernst & Young, echoed this thought. Johri stated that, most of the time, AI is defined in a manner convenient to the user and that rather than reskilling an entire workforce, it may be best to first work on informing education providers and business decision makers about the knowledge and skills needed to work with AI-enabled technology systems, and then have the knowledge trickle down to students and workers.

Improving AI Applications for India Is a Challenge, as Algorithms Need Vast Masses of Data

In a panel discussion about the challenges inherent in building AI systems for India and solving problems on a national scale, Karan Makhija, CEO and Cofounder of Intellicar, a company that creates smart fleet-management systems, said that a main challenge to building out AI applications for India is not having all of the data needed to create efficient programs. Makhija commented that AI algorithms get better with more data and that expansive data pools are currently inadequate, limiting the efficiency of applications.

Data Need to Be Better Integrated and Not Exist in Siloes

In the same panel discussion, Suresh Kumar, GM and Head of Smart Cities at German engineering firm Robert Bosch, said that related data need to integrate better and not exist in siloes. He stated examples of how data from bus services and taxi fleets in a particular city in India are difficult to integrate, as the data collected by buses versus taxis are not organized. Kumar said that the computation involved in integrating these data to enable better traffic management requires complex programs and very powerful machines.

Data Need to Be of Better Quality to Produce Effective Results

Sharma discussed the four Vs of data—velocity, volume, variety and veracity—and emphasized that the last V, which refers to the accuracy or quality of data, can greatly influence the way an AI application behaves. He said that one may be able to collect masses, as well as a broad variety, of data very quickly, but that picking out quality data from the whole set is an onerous task.

To illustrate this point, Sharma cited an experiment carried out by the US Defense Advanced Research Projects Agency (DARPA). The agency’s goal was to make an AI application distinguish between pictures of wolves and pictures of huskies. The AI system identified several images containing a wolf, but also inaccurately identified a husky as a wolf in some images. DARPA found that the data previously fed to the machine contained images of wolves with snow in the background, but the pictures of huskies did not always have snow in the background. Thus, whenever the system saw an image with snow, it identified it as containing an image of a wolf, even when the animal in the image was a husky.

Sharma said that some 70%–80% of one’s time is spent finding the right kind of data to work on. So, extracting better-quality data will enable AI-powered systems to deliver better results.

Presentation slide showing the challenges to implementing cybersecurity measures Source: Coresight Research[/caption]

Gaurav Sharma, VP of IBM India Software Labs and India/South Asia Growth, expressed similar thoughts on the shortage of talent in his keynote, “Emerging Opportunities and Challenges in AI.” Sharma remarked that when technology providers are asked to implement AI solutions for large enterprises, reskilling the workforce is not always the best first move. Providers first need to work on understanding the culture of an organization in order to resolve any concerns staff may have about AI, and then work to deploy AI-powered technological infrastructure.

Abhinav Johri, Director for Global Digital Transformation Learning at Ernst & Young, echoed this thought. Johri stated that, most of the time, AI is defined in a manner convenient to the user and that rather than reskilling an entire workforce, it may be best to first work on informing education providers and business decision makers about the knowledge and skills needed to work with AI-enabled technology systems, and then have the knowledge trickle down to students and workers.

Improving AI Applications for India Is a Challenge, as Algorithms Need Vast Masses of Data

In a panel discussion about the challenges inherent in building AI systems for India and solving problems on a national scale, Karan Makhija, CEO and Cofounder of Intellicar, a company that creates smart fleet-management systems, said that a main challenge to building out AI applications for India is not having all of the data needed to create efficient programs. Makhija commented that AI algorithms get better with more data and that expansive data pools are currently inadequate, limiting the efficiency of applications.

Data Need to Be Better Integrated and Not Exist in Siloes

In the same panel discussion, Suresh Kumar, GM and Head of Smart Cities at German engineering firm Robert Bosch, said that related data need to integrate better and not exist in siloes. He stated examples of how data from bus services and taxi fleets in a particular city in India are difficult to integrate, as the data collected by buses versus taxis are not organized. Kumar said that the computation involved in integrating these data to enable better traffic management requires complex programs and very powerful machines.

Data Need to Be of Better Quality to Produce Effective Results

Sharma discussed the four Vs of data—velocity, volume, variety and veracity—and emphasized that the last V, which refers to the accuracy or quality of data, can greatly influence the way an AI application behaves. He said that one may be able to collect masses, as well as a broad variety, of data very quickly, but that picking out quality data from the whole set is an onerous task.

To illustrate this point, Sharma cited an experiment carried out by the US Defense Advanced Research Projects Agency (DARPA). The agency’s goal was to make an AI application distinguish between pictures of wolves and pictures of huskies. The AI system identified several images containing a wolf, but also inaccurately identified a husky as a wolf in some images. DARPA found that the data previously fed to the machine contained images of wolves with snow in the background, but the pictures of huskies did not always have snow in the background. Thus, whenever the system saw an image with snow, it identified it as containing an image of a wolf, even when the animal in the image was a husky.

Sharma said that some 70%–80% of one’s time is spent finding the right kind of data to work on. So, extracting better-quality data will enable AI-powered systems to deliver better results.

Presentation slide showing the challenges to implementing cybersecurity measures Source: Coresight Research[/caption]

Gaurav Sharma, VP of IBM India Software Labs and India/South Asia Growth, expressed similar thoughts on the shortage of talent in his keynote, “Emerging Opportunities and Challenges in AI.” Sharma remarked that when technology providers are asked to implement AI solutions for large enterprises, reskilling the workforce is not always the best first move. Providers first need to work on understanding the culture of an organization in order to resolve any concerns staff may have about AI, and then work to deploy AI-powered technological infrastructure.

Abhinav Johri, Director for Global Digital Transformation Learning at Ernst & Young, echoed this thought. Johri stated that, most of the time, AI is defined in a manner convenient to the user and that rather than reskilling an entire workforce, it may be best to first work on informing education providers and business decision makers about the knowledge and skills needed to work with AI-enabled technology systems, and then have the knowledge trickle down to students and workers.

Improving AI Applications for India Is a Challenge, as Algorithms Need Vast Masses of Data

In a panel discussion about the challenges inherent in building AI systems for India and solving problems on a national scale, Karan Makhija, CEO and Cofounder of Intellicar, a company that creates smart fleet-management systems, said that a main challenge to building out AI applications for India is not having all of the data needed to create efficient programs. Makhija commented that AI algorithms get better with more data and that expansive data pools are currently inadequate, limiting the efficiency of applications.

Data Need to Be Better Integrated and Not Exist in Siloes

In the same panel discussion, Suresh Kumar, GM and Head of Smart Cities at German engineering firm Robert Bosch, said that related data need to integrate better and not exist in siloes. He stated examples of how data from bus services and taxi fleets in a particular city in India are difficult to integrate, as the data collected by buses versus taxis are not organized. Kumar said that the computation involved in integrating these data to enable better traffic management requires complex programs and very powerful machines.

Data Need to Be of Better Quality to Produce Effective Results

Sharma discussed the four Vs of data—velocity, volume, variety and veracity—and emphasized that the last V, which refers to the accuracy or quality of data, can greatly influence the way an AI application behaves. He said that one may be able to collect masses, as well as a broad variety, of data very quickly, but that picking out quality data from the whole set is an onerous task.

To illustrate this point, Sharma cited an experiment carried out by the US Defense Advanced Research Projects Agency (DARPA). The agency’s goal was to make an AI application distinguish between pictures of wolves and pictures of huskies. The AI system identified several images containing a wolf, but also inaccurately identified a husky as a wolf in some images. DARPA found that the data previously fed to the machine contained images of wolves with snow in the background, but the pictures of huskies did not always have snow in the background. Thus, whenever the system saw an image with snow, it identified it as containing an image of a wolf, even when the animal in the image was a husky.

Sharma said that some 70%–80% of one’s time is spent finding the right kind of data to work on. So, extracting better-quality data will enable AI-powered systems to deliver better results.

Presentation slide showing the challenges to implementing cybersecurity measures Source: Coresight Research[/caption]

Gaurav Sharma, VP of IBM India Software Labs and India/South Asia Growth, expressed similar thoughts on the shortage of talent in his keynote, “Emerging Opportunities and Challenges in AI.” Sharma remarked that when technology providers are asked to implement AI solutions for large enterprises, reskilling the workforce is not always the best first move. Providers first need to work on understanding the culture of an organization in order to resolve any concerns staff may have about AI, and then work to deploy AI-powered technological infrastructure.

Abhinav Johri, Director for Global Digital Transformation Learning at Ernst & Young, echoed this thought. Johri stated that, most of the time, AI is defined in a manner convenient to the user and that rather than reskilling an entire workforce, it may be best to first work on informing education providers and business decision makers about the knowledge and skills needed to work with AI-enabled technology systems, and then have the knowledge trickle down to students and workers.

Improving AI Applications for India Is a Challenge, as Algorithms Need Vast Masses of Data

In a panel discussion about the challenges inherent in building AI systems for India and solving problems on a national scale, Karan Makhija, CEO and Cofounder of Intellicar, a company that creates smart fleet-management systems, said that a main challenge to building out AI applications for India is not having all of the data needed to create efficient programs. Makhija commented that AI algorithms get better with more data and that expansive data pools are currently inadequate, limiting the efficiency of applications.

Data Need to Be Better Integrated and Not Exist in Siloes

In the same panel discussion, Suresh Kumar, GM and Head of Smart Cities at German engineering firm Robert Bosch, said that related data need to integrate better and not exist in siloes. He stated examples of how data from bus services and taxi fleets in a particular city in India are difficult to integrate, as the data collected by buses versus taxis are not organized. Kumar said that the computation involved in integrating these data to enable better traffic management requires complex programs and very powerful machines.

Data Need to Be of Better Quality to Produce Effective Results

Sharma discussed the four Vs of data—velocity, volume, variety and veracity—and emphasized that the last V, which refers to the accuracy or quality of data, can greatly influence the way an AI application behaves. He said that one may be able to collect masses, as well as a broad variety, of data very quickly, but that picking out quality data from the whole set is an onerous task.

To illustrate this point, Sharma cited an experiment carried out by the US Defense Advanced Research Projects Agency (DARPA). The agency’s goal was to make an AI application distinguish between pictures of wolves and pictures of huskies. The AI system identified several images containing a wolf, but also inaccurately identified a husky as a wolf in some images. DARPA found that the data previously fed to the machine contained images of wolves with snow in the background, but the pictures of huskies did not always have snow in the background. Thus, whenever the system saw an image with snow, it identified it as containing an image of a wolf, even when the animal in the image was a husky.

Sharma said that some 70%–80% of one’s time is spent finding the right kind of data to work on. So, extracting better-quality data will enable AI-powered systems to deliver better results.